From Ad-Hoc Checks to Automated Institutional Knowledge

Ad-Hoc Checks, Siloed Knowledge

"I reviewed three PRs in one day. Each touched the core metrics, but each developer checked different things. One caught a revenue metric issue; another completely missed it. Not because they weren't good at their job. They just didn't know what they didn't know."

"I know which checks matter when certain columns are touched or impacted. These validations live in my personal notebook. I just don't have the time or a proper way to share them with the team, especially with those who onboarded recently."

If that sounds familiar, you're not alone. Maybe you're a reviewer or senior data developer who already knows what to check. Or maybe you're someone who submits a PR with fingers crossed, hoping the reviewer catches issues you might have missed.

This isn't just a process problem. It's a knowledge problem.

Checks aren't just validation steps. They're artifacts of domain knowledge, learned over time through incidents, bugs, and experience.

The Screenshot Problem

We watched data developers validate their work during development. They knew what they changed. They knew how to validate the changes: a profile diff here, a custom query there, a value diff on a specific column. Each validation was a check.

What they didn't know was how to validate the impact.

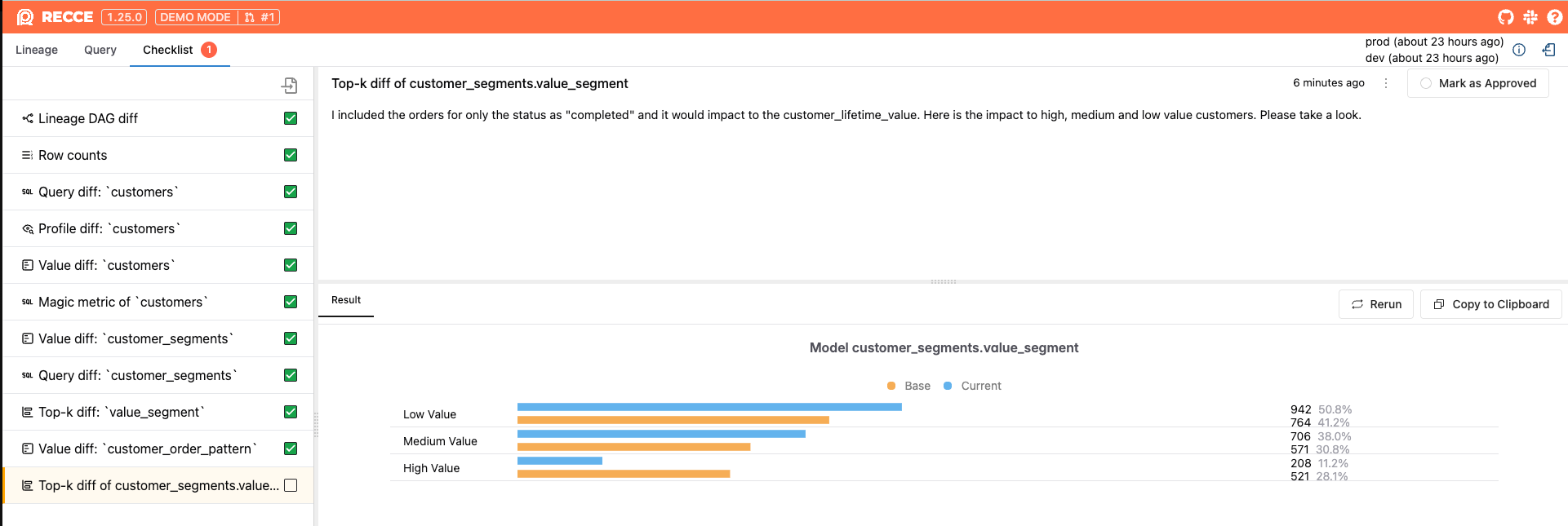

In this PR, I modified the CLV calculation within the customers model to consider only "completed" orders. I validated that the row counts stayed the same and that there was no schema change. I know what I’ve changed. However, I also know this change reduces the number and amount of orders counted toward CLV, so the CLV of each customer is impacted. I just don't know whether the impact is "correct" and acceptable to the marketing team.
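To make the gap concrete, here is a minimal Python sketch of that situation. It is not Recce's implementation: the `ORDERS` data is invented, CLV is simplified to a sum of order amounts, and `build_customers` and `value_diff` are hypothetical helpers. It shows how row counts and schema can stay identical while the values still shift.

```python
# Toy orders table; every value here is invented for illustration.
ORDERS = [
    {"customer_id": 1, "amount": 100.0, "status": "completed"},
    {"customer_id": 1, "amount": 50.0, "status": "returned"},
    {"customer_id": 2, "amount": 80.0, "status": "completed"},
    {"customer_id": 3, "amount": 30.0, "status": "pending"},
]


def build_customers(orders, completed_only):
    """Return one row per customer with a simplified CLV (sum of order amounts)."""
    clv = {}
    for order in orders:
        cid = order["customer_id"]
        clv.setdefault(cid, 0.0)  # every customer keeps a row, even with no counted orders
        if completed_only and order["status"] != "completed":
            continue
        clv[cid] += order["amount"]
    return {cid: {"customer_id": cid, "clv": value} for cid, value in clv.items()}


def value_diff(base, current, column):
    """List (key, base value, current value) for shared rows whose column changed."""
    return [
        (key, base[key][column], current[key][column])
        for key in sorted(base.keys() & current.keys())
        if base[key][column] != current[key][column]
    ]


base = build_customers(ORDERS, completed_only=False)    # before the PR
current = build_customers(ORDERS, completed_only=True)  # after the PR

same_row_count = len(base) == len(current)
same_schema = all(base[key].keys() == current[key].keys() for key in base)
changed = value_diff(base, current, "clv")

print("row count unchanged:", same_row_count, "| schema unchanged:", same_schema)
for key, before, after in changed:
    print(f"customer {key}: CLV {before} -> {after}")
```

The structural checks pass, yet two customers end up with a different CLV. Whether those new numbers are acceptable is a question only someone with the domain knowledge can answer.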

Data developers told us their manual process:

- Prepare two datasets for stakeholders

- Do the data diffing

- Export results to spreadsheets or screenshots

- Explain why changes are expected and what stakeholders need to validate

They need collaboration and domain knowledge from reviewers and stakeholders.

We built checks and checklists to streamline this:

- Data is ready in Recce for diffing

- Do the data diffing with a one-click profile diff or a custom query. Each is a check

- See results instantly

- Add checks to the checklist with context: why this is expected, what stakeholders should validate

A Checklist Is Useless without Collaboration

However, our open source users didn't use the checklist.

One developer told us: "Why should I add this validation to the checklist? I validate on my local machine. I don't want to ask the stakeholder to install Recce open source and set up the two environments. Some of them don't have experience with terminal commands or an IDE. If they want to see the result, I just copy it to the clipboard and paste the image into a Slack thread."

It felt good enough. Until it didn't.

Weeks later, the same user asked how to crop screenshots better: how to include enough information and zoom in on the right area to make the result clear.

That didn't make sense: data engineers were spending time perfecting screenshots instead of validating data.

The Cloud Unlock

When teams moved to Recce Cloud, everything changed. The same developers who ignored checklists locally told us: "We love the checklist!"

With Recce Cloud, the checklists are a really powerful feature. We can collaborate on validation directly in the PR.

— Fabian Jocks, Technical Lead / Data Team Lead at vaidukt

Share a link. Data developers show the validation results: what they checked, why they ran those validations, and the exact impact in numbers and charts. Then they ask the stakeholders to validate.

Click a link. PR reviewers, or stakeholders who cannot access GitHub/GitLab, join the validation with full context. They can ask questions or request more checks.

Recce Cloud unlocks sharing and collaboration. Checklists finally make sense.

Checks as Institutional Knowledge

That's when it clicked: checks aren't just validation. Checks are the knowledge about the data project that we capture from each PR.

Using the same example PR, we learned that the marketing team cares about the high/medium/low customer segments, and that we can show the impact with a top-k diff on customer_segments.value_segments. Next time the CLV is modified or impacted, we want to make sure this check is performed.

The value segments top-K diff
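As a rough illustration of what a top-k diff reports, the Python sketch below counts segment labels before and after a change and compares the most frequent ones. It is a sketch under stated assumptions, not how Recce computes it: the segment lists are invented, and `top_k_diff` is a hypothetical helper that ranks categories by their combined frequency.

```python
from collections import Counter


def top_k_diff(base_values, current_values, k=3):
    """Compare counts of the k most frequent categories across two versions."""
    base_counts = Counter(base_values)
    current_counts = Counter(current_values)
    # Rank categories by combined frequency so both sides share the same rows.
    combined = base_counts + current_counts
    top = [category for category, _ in combined.most_common(k)]
    return [
        (
            category,
            base_counts[category],
            current_counts[category],
            current_counts[category] - base_counts[category],
        )
        for category in top
    ]


# Invented segment labels, one per customer, before and after the CLV change.
base_segments = ["high", "high", "medium", "medium", "low", "low"]
current_segments = ["high", "medium", "medium", "low", "low", "low"]

result = top_k_diff(base_segments, current_segments)
for category, before, after, delta in result:
    print(f"{category:<6} base={before} current={after} delta={delta:+d}")
```

In this toy data the total number of customers is unchanged, but one customer moves out of "high" and "low" grows by one. That shift between segments is the kind of signal the marketing team cares about.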

You may immediately wonder whether this could be done automatically next time.

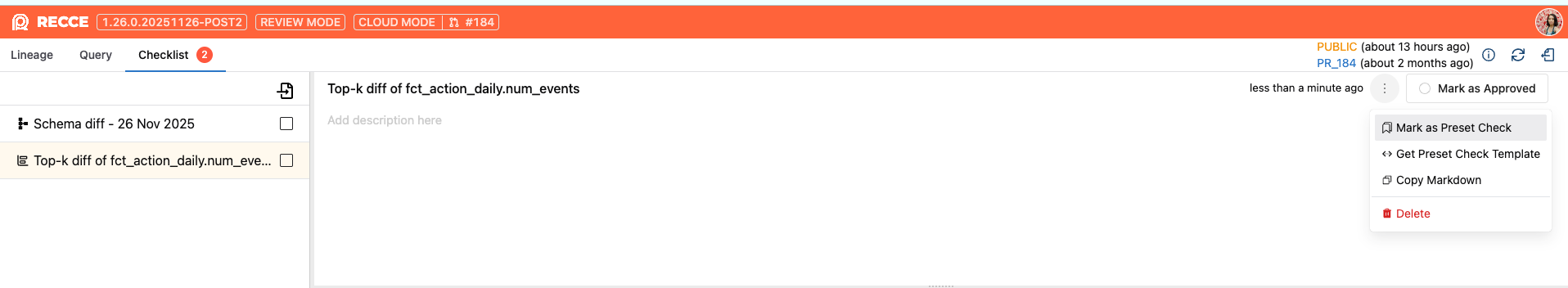

We built preset checks based on this concept. You mark a check as a preset check, and it then runs automatically on every PR once Recce is integrated into your CI.

Preserving Knowledge in the Cloud

But there was a problem. We'd built checklists inside Recce instances. When the instance closed, the checks were gone. The knowledge disappeared.

This organizational knowledge should accumulate, not vanish. Senior data developers know which metrics need care, which models are critical, and which pitfalls are easy to fall into. We want other data engineers to benefit from that experience right away.

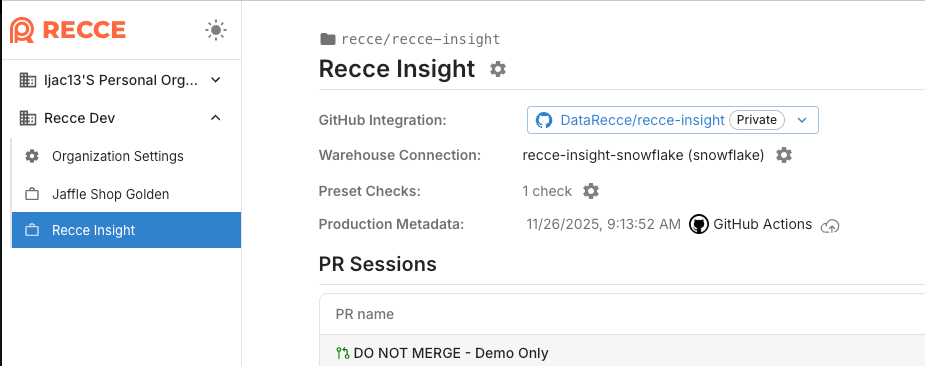

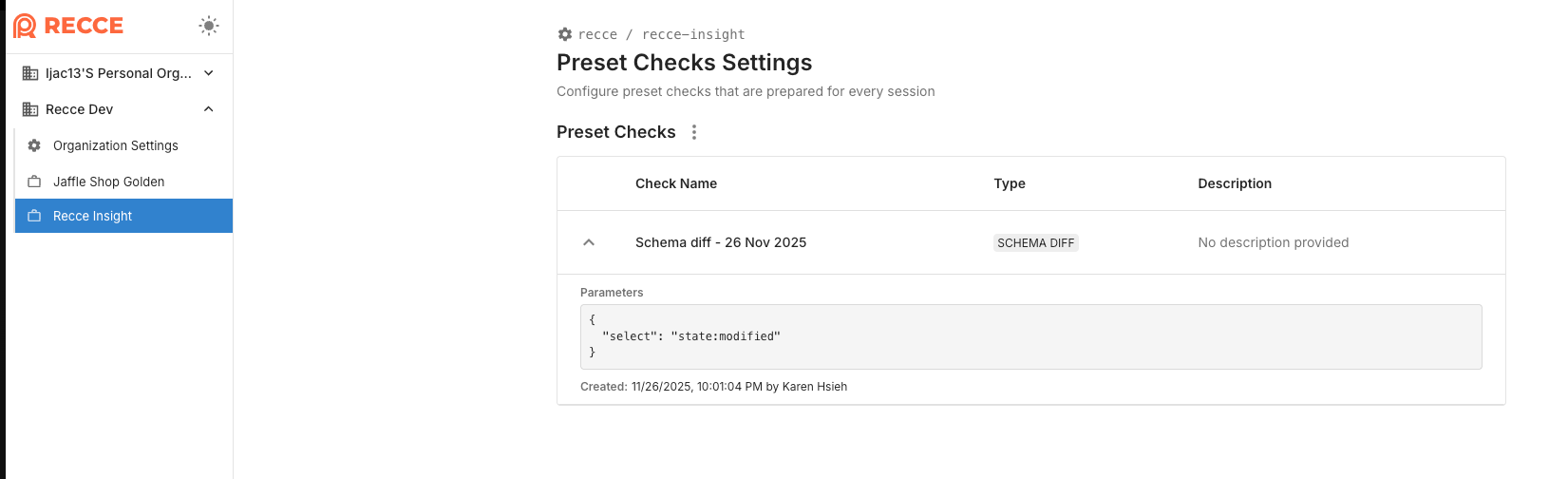

We are moving the checks out of the instances, starting with the preset checks. They now live in Recce Cloud, preserved at the data project level.

When you validate something on a PR and think "every PR should check this," you can mark it as a preset check.

Mark as Preset Check in checklist

See the preset checks of a data project on Recce Cloud. As a data team lead, this is your safety net. No matter how experienced your teammates are, the PRs they create will run these checks.

See “Preset Checks” of this project

See details of each preset check

What's Next

Preset checks currently run when PRs are created. We're considering adding rules like "When X model is impacted, do Y check," so that the automation runs only when it matters.
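Since this is still an idea we are exploring, the Python sketch below only illustrates the shape such a rule could take. Nothing here is an existing Recce API: `PresetRule`, `checks_to_run`, the `stg_payments` model name, and the row count check on orders are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PresetRule:
    """Hypothetical rule: run `check` when any model in `models` is impacted."""
    check: str
    models: frozenset


def checks_to_run(rules, impacted_models):
    """Return the checks whose trigger models overlap the impacted models."""
    impacted = set(impacted_models)
    return [rule.check for rule in rules if rule.models & impacted]


# Illustrative rules only; the second check is invented for the example.
RULES = [
    PresetRule(
        "top-k diff on customer_segments.value_segments",
        frozenset({"customers", "customer_segments"}),
    ),
    PresetRule("row count diff on orders", frozenset({"orders"})),
]

# A PR that impacts the customers model triggers only the segment check.
print(checks_to_run(RULES, ["customers"]))
# A PR that impacts none of the listed models triggers nothing.
print(checks_to_run(RULES, ["stg_payments"]))
```

Keying rules on impacted models rather than on changed files would let a check fire when a downstream model shifts, even if the PR never touched that model directly.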

Beyond rules, we're exploring how best to leverage this knowledge in Recce Cloud.

We're curious:

- How do you currently share your ad-hoc queries with your team?

- How do you ensure that junior data engineers or new hires validate like a pro?

We're building this in Recce Cloud. If these problems keep you up at night, sign up for Recce Cloud and try it with your PR. We'd love your feedback: join our customer feedback program.